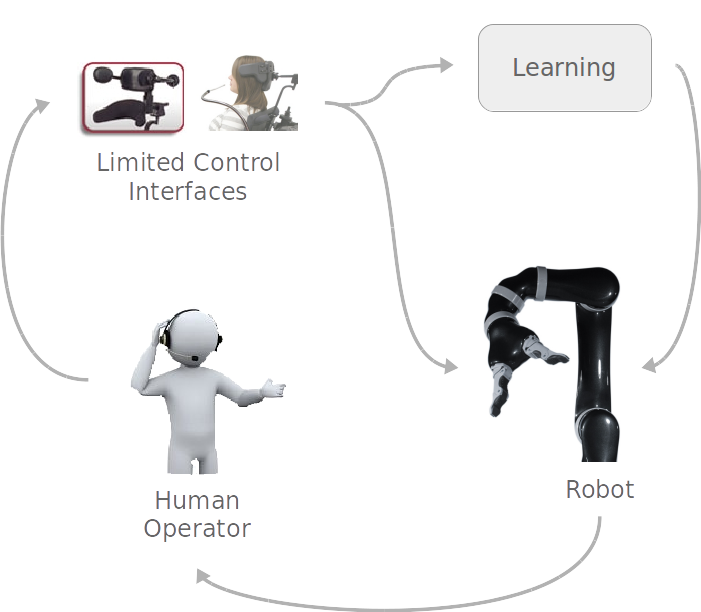

Robots that do not adapt to the changing needs of their users when providing physical assistance will struggle to achieve widespread adoption and acceptance. Not only do the user's physical abilities change over time, and with them the amount of assistance desired or needed, but so does the way the user operates the robot.

The fact that there is always a human in the loop offers an opportunity: to learn from the human, which turns adaptation into a problem of robot learning from human teachers [2]. This raises a significant question: how will the machine learning algorithm behave when instructed by teachers who not only are not machine learning or robotics experts, but also have motor impairments that influence the learning signals they provide?

There has been limited study of robot learning from non-experts, and the domain of motor-impaired teachers is even more challenging: their teleoperation signals are noisy (due to artifacts the impairment leaves in the motor signal), sparse (because providing motor commands takes more effort with an impairment), and filtered through a control interface [12].

Rather than treating these constraints as limitations, we hypothesize that they can become advantageous for machine learning algorithms that exploit unique characteristics (such as problem-space sparsity) of the control and feedback signals provided by motor-impaired humans. Our work on this project will contribute algorithmic approaches tailored specifically to the constraints of learning from motor-impaired users, along with an evaluation of these algorithms in use by end-users.

As a first step, we are performing large-scale studies of human teleoperation of assistive machines using a variety of interfaces. Characterizing interface usage is part of these studies, and for this purpose we developed an open-source interface assessment package, the first of its kind for assistive robotics [34, 38, 54]. A study of participants with and without spinal cord injury found statistically significant differences across multiple metrics when the same person performed the same task using different control interfaces [36]. We also investigated the impact of the control interface on features of heart rate variability, which have been used as measures of cognitive load [49].
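To make "features of heart rate variability" concrete, the following is a minimal sketch of two widely used time-domain features, SDNN and RMSSD, computed from a sequence of RR intervals (the time between successive heartbeats, in milliseconds). The function name `hrv_time_domain` and the sample data are hypothetical, and the choice of features is an assumption for illustration; the text above does not state which features were analyzed in [49].

```python
import math


def hrv_time_domain(rr_ms):
    """Return (mean_hr_bpm, sdnn_ms, rmssd_ms) for RR intervals given in ms.

    Hypothetical helper for illustration only; not taken from the
    interface assessment package described in the text.
    """
    if len(rr_ms) < 3:
        raise ValueError("need at least three RR intervals")

    n = len(rr_ms)
    mean_rr = sum(rr_ms) / n

    # SDNN: sample standard deviation of the RR intervals.
    sdnn = math.sqrt(sum((x - mean_rr) ** 2 for x in rr_ms) / (n - 1))

    # RMSSD: root mean square of successive RR differences.
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    rmssd = math.sqrt(sum(d * d for d in diffs) / len(diffs))

    # Mean heart rate in beats per minute (60000 ms per minute).
    mean_hr = 60000.0 / mean_rr
    return mean_hr, sdnn, rmssd


# Made-up RR intervals, e.g. one short recording per control interface.
sample_rr = [800, 810, 790, 800]
mean_hr, sdnn, rmssd = hrv_time_domain(sample_rr)
```

Under this sketch, comparing such features across recordings made with different control interfaces would be one way to look for interface-dependent differences, though the actual analysis may differ.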

Our follow-on work now explicitly models physical interface activation, rather than treating it as a black box, and thus allows for potential discrepancies between intended and measured interface commands [46].

Funding Source: National Science Foundation (NSF/RI-1552706 CAREER: Robot Learning from Motor-Impaired Teachers and Task Partners)