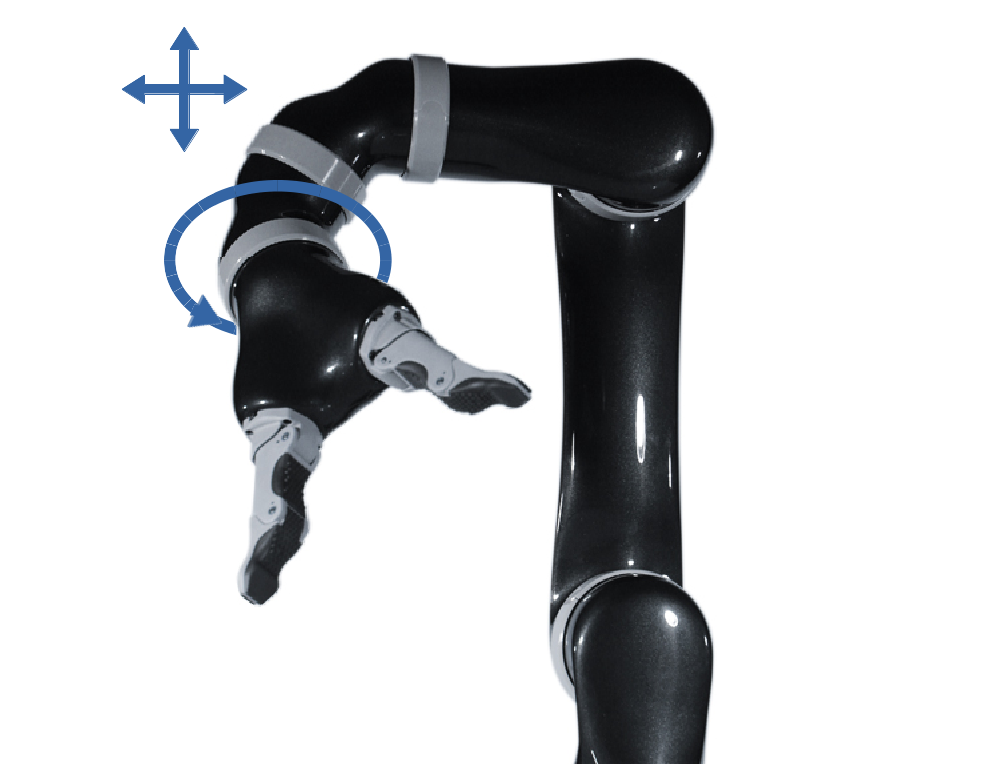

When the dimensionality of the control signals a human can issue is smaller than the number of controllable degrees of freedom (DoF) of the robot, the control space is often partitioned so that the human operates only a subset of the robot’s DoF at any given time; each such subset is called a control mode. One way for autonomy to help is to blend with the user’s commands and so bridge the dimensionality gap; another is to anticipate when to switch between control modes.
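To make the idea of a control mode concrete, the following is a minimal Python sketch. It assumes a 6-DoF end-effector velocity command and a 2-axis joystick. The three-way partition in `CONTROL_MODES` and the helpers `to_robot_command` and `next_mode` are hypothetical illustrations and are not part of this project’s software.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical example: a 6-DoF end-effector velocity command
# (vx, vy, vz, wx, wy, wz) driven by a 2-axis joystick.
DOF_NAMES = ("vx", "vy", "vz", "wx", "wy", "wz")

# Assumed partition of the 6 DoF into three 2-D control modes.
CONTROL_MODES: Dict[str, Tuple[int, int]] = {
    "translate_xy": (0, 1),
    "translate_z_yaw": (2, 5),
    "rotate_roll_pitch": (3, 4),
}


def to_robot_command(mode: str, joystick: Sequence[float]) -> List[float]:
    """Embed a 2-D joystick command into the 6-D robot command space.

    Only the DoF that belong to the active control mode receive the
    joystick values; every other DoF is commanded to zero.
    """
    if len(joystick) != 2:
        raise ValueError("expected a 2-axis joystick command")
    command = [0.0] * len(DOF_NAMES)
    for axis_value, dof_index in zip(joystick, CONTROL_MODES[mode]):
        command[dof_index] = float(axis_value)
    return command


def next_mode(mode: str) -> str:
    """Manual mode switch: cycle to the next mode in a fixed order."""
    names = list(CONTROL_MODES)
    return names[(names.index(mode) + 1) % len(names)]


if __name__ == "__main__":
    mode = "translate_xy"
    # prints: translate_xy [0.5, -0.2, 0.0, 0.0, 0.0, 0.0]
    print(mode, to_robot_command(mode, [0.5, -0.2]))
    mode = next_mode(mode)  # an explicit switch to the next mode
    # prints: translate_z_yaw [0.0, 0.0, 0.1, 0.0, 0.0, 0.3]
    print(mode, to_robot_command(mode, [0.1, 0.3]))
```

In this sketch every mode switch is an explicit `next_mode` call made by the user. The assistance studied here would replace that manual call with a switch chosen by the autonomy.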

This project explores assistance in which the robot autonomy anticipates, and performs on the user’s behalf, switches between lower-dimensional control modes. The exact nature of this assistance may be customized to individual users. Our work has introduced the idea of performing automated mode switching with the specific aim of disambiguating user intent [26, 42]. The general idea is one of “help me to help you”: the human teleoperates the robot in the subspace of the robot’s control space within which movement provides the clearest indication of the human’s goal, so that the autonomy can step in and provide control assistance sooner and with greater accuracy. The autonomy identifies that disambiguating subspace and selects the control mode that operates within it. We have also developed a framework for modeling how humans operate the control interface during robot teleoperation, one that, critically, explicitly acknowledges differences between intended and issued control commands [46].
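The mode-selection step can be illustrated with a deliberately simplified proxy. This is not the disambiguation metric of [26, 42]. The sketch assumes a distance-based goal confidence, simulates the user taking one small step toward each candidate goal using only the DoF of a given mode, and scores the mode by the expected confidence in the true goal after that step. The 3-DoF translational robot, the 1-D modes in `MODES`, the parameters `beta` and `step`, and all function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Hypothetical setup: a 3-DoF translational robot operated through
# 1-D control modes, one per Cartesian axis.
MODES: Dict[str, Tuple[int, ...]] = {"x": (0,), "y": (1,), "z": (2,)}


def goal_belief(position: Sequence[float], goals: Sequence[Sequence[float]],
                beta: float = 10.0) -> List[float]:
    """Assumed confidence model: softmax over negative distances to each goal."""
    logits = [-beta * math.dist(position, g) for g in goals]
    top = max(logits)
    weights = [math.exp(v - top) for v in logits]  # shift by max for stability
    total = sum(weights)
    return [w / total for w in weights]


def simulated_step(position: Sequence[float], goal: Sequence[float],
                   axes: Tuple[int, ...], step: float) -> List[float]:
    """Move `step` toward `goal`, using only the DoF listed in `axes`."""
    direction = [0.0] * len(position)
    for a in axes:
        direction[a] = goal[a] - position[a]
    norm = math.sqrt(sum(d * d for d in direction))
    if norm < 1e-9:  # this mode cannot move the robot toward the goal
        return list(position)
    return [p + step * d / norm for p, d in zip(position, direction)]


def disambiguation_score(position, goals, axes, step=0.1, beta=10.0) -> float:
    """Expected confidence in the user's true goal after one simulated step.

    Each goal is treated as the true target with probability equal to the
    current belief, and the user is assumed to move straight toward it
    within the mode's subspace.
    """
    prior = goal_belief(position, goals, beta)
    score = 0.0
    for i, goal in enumerate(goals):
        moved = simulated_step(position, goal, axes, step)
        score += prior[i] * goal_belief(moved, goals, beta)[i]
    return score


def select_mode(position, goals, modes=MODES, step=0.1, beta=10.0) -> str:
    """Choose the mode whose motion is expected to best disambiguate the goals."""
    return max(modes, key=lambda m: disambiguation_score(
        position, goals, modes[m], step, beta))


if __name__ == "__main__":
    robot = (0.0, 0.0, 0.0)
    goals = [(1.0, 0.2, 0.0), (1.0, -0.2, 0.0)]
    for name, axes in MODES.items():
        print(name, round(disambiguation_score(robot, goals, axes), 3))
    print("selected mode:", select_mode(robot, goals))
```

In the usage example the two goals differ only along y, so the sketch selects mode `y`. Moving along x keeps the robot equidistant from both goals, and mode z cannot move toward either goal, so neither mode changes the confidence.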

Funding Source: National Science Foundation (NSF/CPS-1544797)