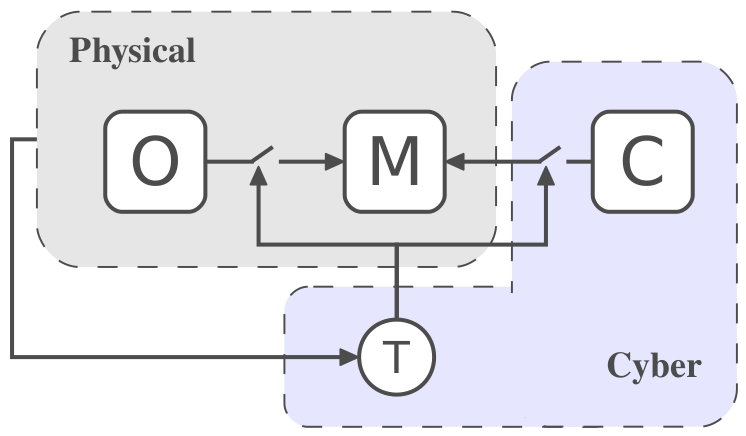

How much should a person be allowed to interact with a controlled machine?

The aim of this project is to balance a person's ability to direct a cyber-physical system against the system's representation of its own capabilities and limitations. We have developed a science of trust that bridges human operator capabilities and physical system safety.

We focus specifically on robot motion that is mutually controlled by a human and an autonomous system [1,8,10]. The system computes a formal measure of trust in the operator and uses it to decide how much control to cede to the operator during physical correction or mutual control [21].
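The idea of ceding a trust-dependent share of control can be made concrete with a minimal sketch. It assumes a scalar trust value in [0, 1] and a linear blend of the operator's and the autonomy's commands. Both the blending rule and the `trust_from_disagreement` score are hypothetical illustrations. They are not the formal trust measure of [21], which this page does not specify.

```python
import numpy as np


def trust_from_disagreement(u_human, u_auto, scale=1.0):
    """Hypothetical trust score (not the measure from [21]).

    Returns 1.0 when the operator and autonomy commands agree and decays
    toward 0.0 as they diverge; `scale` sets how quickly it decays.
    """
    d = np.linalg.norm(np.asarray(u_human, float) - np.asarray(u_auto, float))
    return float(np.exp(-(d / scale) ** 2))


def blend_controls(u_human, u_auto, trust):
    """Cede a share of control to the operator in proportion to trust.

    Assumed rule: u = trust * u_human + (1 - trust) * u_auto, where
    trust = 1 gives full operator control and trust = 0 full autonomy.
    """
    alpha = float(np.clip(trust, 0.0, 1.0))  # keep the weight in [0, 1]
    u_human = np.asarray(u_human, dtype=float)
    u_auto = np.asarray(u_auto, dtype=float)
    return alpha * u_human + (1.0 - alpha) * u_auto


# Usage: operator pushes along x, autonomy wants to hold still.
u_h, u_a = [1.0, 0.0], [0.0, 0.0]
trust = trust_from_disagreement(u_h, u_a)  # exp(-1) ≈ 0.368
u_cmd = blend_controls(u_h, u_a, trust)    # ≈ [0.368, 0.0]
```

A linear blend is only one common arbitration choice. It keeps the allocation interpretable, because the trust value directly equals the operator's share of the command.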

Our work on this project has also jointly modelled the human-robot system dynamics, using spectral methods that learn from data gathered while a human teleoperates the machine [27, 42]. These data-driven models have then been used within shared-control paradigms that provide task-agnostic assistance, injecting control only when it is needed for safety [32], and that use stochastic sampling to intervene minimally in the human's control signal [35]. (This work is also presented in the PhD dissertation of Alexander Broad.)
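The pipeline described above has two parts. First, a model is learned from teleoperation data. Second, that model drives an assistance step that acts only when needed and otherwise stays close to the human's input. The sketch below illustrates both parts under stated assumptions, and it should not be read as the methods of [27, 42, 32, 35]. It assumes the spectral model is a Koopman-operator approximation fit by least squares over lifted observables (EDMD with a linear input term). The observable basis `lift`, the toy 1-D dynamics standing in for logged teleoperation data, and the `is_safe` check are all hypothetical. The intervention step is a simple rejection sampler. It returns the human's command unchanged when the predicted next state is safe. Otherwise it samples perturbations around that command and returns the safe sample closest to it.

```python
import numpy as np


def lift(x):
    # Hypothetical observable basis: the state, its square, and a constant.
    x = np.asarray(x, dtype=float)
    return np.concatenate([x, x**2, [1.0]])


def fit_koopman(X, U, Xn):
    """Least-squares fit of lift(x') ≈ A lift(x) + B u (EDMD with control)."""
    Psi = np.array([lift(x) for x in X])          # (N, m) lifted states
    Psin = np.array([lift(x) for x in Xn])        # (N, m) lifted successors
    Z = np.hstack([Psi, np.asarray(U, float)])    # (N, m + p) regressors
    K, *_ = np.linalg.lstsq(Z, Psin, rcond=None)  # (m + p, m)
    K = K.T                                       # (m, m + p)
    m = Psi.shape[1]
    return K[:, :m], K[:, m:]                     # A: (m, m), B: (m, p)


def predict(A, B, x, u, n):
    # One-step prediction, read back in the original state coordinates.
    return (A @ lift(x) + B @ np.atleast_1d(u))[:n]


def minimal_intervention(A, B, x, u_h, is_safe, n, rng,
                         n_samples=200, sigma=0.5):
    """Hypothetical sampling-based filter: change u_h only if it is unsafe."""
    u_h = np.atleast_1d(np.asarray(u_h, dtype=float))
    if is_safe(predict(A, B, x, u_h, n)):
        return u_h  # no intervention needed
    # Sample candidate commands around the human's input.
    candidates = u_h + sigma * rng.standard_normal((n_samples, u_h.size))
    safe = [u for u in candidates if is_safe(predict(A, B, x, u, n))]
    if not safe:
        return None  # caller must supply a fallback
    # Minimal intervention: the safe sample nearest the human's command.
    return min(safe, key=lambda u: np.linalg.norm(u - u_h))


# Toy stand-in for logged teleoperation data: x' = 0.9 x + 0.1 u.
rng = np.random.default_rng(0)
X = rng.uniform(-2.0, 2.0, size=(200, 1))
U = rng.uniform(-1.0, 1.0, size=(200, 1))
Xn = 0.9 * X + 0.1 * U
A, B = fit_koopman(X, U, Xn)


def is_safe(x):
    # Hypothetical safety constraint: stay within |x| <= 1.
    return abs(x[0]) <= 1.0


# At x = 1, the command 1.5 would push the state to 1.05, so it gets adjusted.
u_safe = minimal_intervention(A, B, np.array([1.0]), 1.5, is_safe, n=1, rng=rng)
```

Returning the nearest safe sample, rather than replacing the command outright, keeps the assistance as close as possible to what the operator asked for. That reflects the "minimally intervene" goal stated above, although a real system would use its own sampling distribution and cost.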

Funding Source: National Science Foundation (NSF/CNS-1329891 and NSF/CNS-1837515). In collaboration with Todd Murphey, Mechanical Engineering, Northwestern University.